Exploring OpenShift with CRC

Date: 2024-01-14

OpenShift Container Platform (OCP), often referred to simply as OpenShift, is a comprehensive, feature-complete enterprise PaaS offering from Red Hat built on top of Kubernetes. It is available both as a fully managed service on popular public cloud platforms, such as Red Hat OpenShift Service on AWS (ROSA), and as an internal developer platform (IDP) deployed on-premises on existing private cloud infrastructure, as VMs or on bare metal.

Compared to Kubernetes, OpenShift:

- Is an out-of-the-box IDP as opposed to an infrastructure layer for building your own IDP

- Places a strong emphasis on the developer experience and enhancing developer productivity

- Officially supports automated infrastructure provisioning on supported platforms as part of cluster creation, as opposed to creating clusters manually with kubeadm and (optionally) relying on third-party tooling and integrations for automation

- Is an open-core commercial offering, as opposed to a fully open source project

- Is opinionated, as opposed to flexible and customizable

However, due to its comprehensive feature set and extensive automation, a production-grade OpenShift cluster has non-trivial resource requirements, making it out of reach for individual developers and home lab enthusiasts wanting to try out OpenShift locally or on commodity hardware such as various editions of the Raspberry Pi.

Fortunately, just as projects like kind and Minikube enable developers to spin up a local Kubernetes environment in no time, CRC, also known as OpenShift Local and a recursive acronym for “CRC - Runs Containers”, offers developers a local OpenShift environment by means of a pre-configured VM similar to how Minikube works under the hood.

In the lab that follows, we will provision a single-node OpenShift cluster using CRC, convince ourselves that OpenShift is in fact Kubernetes (and then some), and briefly explore what OpenShift has to offer. The good news: all you need is your own laptop (or desktop / workstation) to follow along!

Lab: Provisioning a single-node OKD cluster using CRC

OKD is the community distribution of Kubernetes that powers Red Hat OpenShift. It is available under the permissive Apache 2.0 open source license, in the same spirit as upstream Kubernetes. It was formerly known as OpenShift Origin, and rumor has it that its acronym stands for “Origin Kubernetes Distribution”, though you will not find this anywhere in the official documentation. Apart from the lack of commercial support and of access to Red Hat certified Operators (more on that later), OKD is functionally identical to OCP, the commercial version of OpenShift.

While CRC does support the creation of a local OCP cluster, we will stick to OKD since (1) it allows us to focus on the open source ecosystem around OpenShift and (2) we won’t be getting any commercial support from Red Hat for OpenShift Local anyway :-P

For the sake of simplicity, from here on we will refer to OKD simply as OpenShift where the context allows, except where this might cause confusion with the commercial edition (OCP).

Prerequisites

A basic understanding of Kubernetes is assumed. If you are new to Kubernetes, consider enrolling in LFS158x: Introduction to Kubernetes, a free, self-paced online course offered by the Linux Foundation on edX.

Setting up your environment

You’ll need a laptop (or desktop / workstation) fulfilling the minimum resource requirements outlined below:

- 4 physical CPU cores (8 vCPU cores)

- 16 GiB of RAM

- At least 35 GiB of available storage
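If you are on Linux and want a quick sanity check before downloading anything, the sketch below compares your machine against the minimums listed above. It assumes util-linux's lscpu, /proc/meminfo and GNU coreutils' df are available. The function names are hypothetical helpers, not part of CRC. The 15 GiB memory threshold is an assumption that allows for MemTotal reading slightly below the installed RAM. CRC itself checks minimum RAM when it starts, so treat this only as a convenience.

```shell
# Sketch: check this Linux host against the CRC minimums listed above.
# All function names are hypothetical helpers, not part of CRC.

# Physical cores = unique (core, socket) pairs reported by lscpu.
physical_cores() {
  lscpu --parse=CORE,SOCKET | grep -v '^#' | sort -u | wc -l
}

# Total RAM in whole GiB (MemTotal in /proc/meminfo is given in KiB).
total_mem_gib() {
  awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo
}

# Free space in GiB on the filesystem holding $HOME (CRC keeps its VM under ~/.crc).
free_disk_gib() {
  df -BG --output=avail "$HOME" | tail -n 1 | tr -dc '0-9'
}

# Compare the given values against the minimums and print each shortfall.
# Returns 0 if every minimum is met, 1 otherwise.
# Assumption: 15 GiB rather than 16, since MemTotal reads below installed RAM.
meets_crc_minimums() {
  local cores=$1 mem_gib=$2 disk_gib=$3 status=0
  if [ "$cores" -lt 4 ]; then
    echo "CPU: $cores physical cores (need at least 4)"; status=1
  fi
  if [ "$mem_gib" -lt 15 ]; then
    echo "RAM: $mem_gib GiB (need about 16)"; status=1
  fi
  if [ "$disk_gib" -lt 35 ]; then
    echo "Disk: $disk_gib GiB free (need at least 35)"; status=1
  fi
  return "$status"
}

# Usage:
#   meets_crc_minimums "$(physical_cores)" "$(total_mem_gib)" "$(free_disk_gib)" \
#     && echo "This machine should be able to run CRC"
```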

Being a feature-complete IDP, OpenShift requires more resources than plain Kubernetes. So, unfortunately, a decade-old laptop with only 4 GiB of RAM or an entry-level MacBook Air with 8 GiB will not suffice (-:

All the major desktop operating systems are supported, including Windows, macOS and (popular distributions of) Linux, though note that some commands might require minor modification on Windows.

Now download the latest version of CRC (2.31.0 at the time of writing) and place it on your PATH. For example, on Linux, you might run the following commands:

wget https://developers.redhat.com/content-gateway/file/pub/openshift-v4/clients/crc/2.31.0/crc-linux-amd64.tar.xz

tar xvf crc-linux-amd64.tar.xz

mkdir -p "$HOME/.local/bin/"

install crc-linux-2.31.0-amd64/crc "$HOME/.local/bin/crc"
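The commands above assume that $HOME/.local/bin is already on your PATH. This is the default on many, but not all, Linux distributions. Below is a small sketch that checks for it and prepends it only when missing. path_has_dir is a hypothetical helper name, and the snippet assumes a POSIX-compatible shell such as bash.

```shell
# Return 0 if directory $2 appears as an entry in the colon-separated list $1.
path_has_dir() {
  case ":$1:" in
    *":$2:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Prepend ~/.local/bin to PATH for this session only if it is not there yet.
# (Add the same lines to ~/.bashrc or your shell's rc file to make it permanent.)
if ! path_has_dir "$PATH" "$HOME/.local/bin"; then
  export PATH="$HOME/.local/bin:$PATH"
fi
```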

Verify the installation now:

crc version

Sample output:

CRC version: 2.31.0+6d23b6

OpenShift version: 4.14.7

Podman version: 4.4.4

Spinning up an OpenShift Local cluster

Set OKD as the desired OpenShift distribution:

crc config set preset okd

Now run the setup command, which may take a few minutes on initial execution:

crc setup

If crc setup exits with an error, it should tell you which dependencies you need to install and/or configure manually. In that case, follow the instructions in the error message to resolve the issue, then try again.
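On Linux, two common reasons for CRC to complain are missing KVM support and the current user not being in the libvirt group. Both appear among the checks in the crc start output below. The sketch below checks them by hand. It assumes a Linux host with the libvirt driver, and the helper names are hypothetical. After being added to the libvirt group, you typically need to log out and back in before the change applies to your session.

```shell
# Return 0 if the current process is a member of group $1.
user_in_group() {
  id -nG | tr ' ' '\n' | grep -qx -- "$1"
}

# Hypothetical pre-flight checks for CRC's libvirt driver on Linux.
# Prints each problem found; returns 0 only if all checks pass.
crc_linux_preflight() {
  local status=0
  if ! grep -Ewq 'vmx|svm' /proc/cpuinfo; then
    echo "CPU virtualization extensions (vmx/svm) not reported"; status=1
  fi
  if [ ! -e /dev/kvm ]; then
    echo "/dev/kvm not found: is KVM enabled?"; status=1
  fi
  if ! user_in_group libvirt; then
    echo "$(id -un) is not (yet) in the libvirt group"; status=1
  fi
  return "$status"
}

# Usage: crc_linux_preflight && echo "Basic virtualization prerequisites look OK"
```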

Now spin up our OpenShift cluster, which may take some 15-30 minutes. Grab a cup of coffee (or tea) and wait patiently while the cluster bootstraps and stabilizes itself:

crc start

Sample output (kubeadmin password elided for security reasons):

INFO Using bundle path /home/donaldsebleung/.crc/cache/crc_okd_libvirt_4.14.0-0.okd-2023-12-01-225814_amd64.crcbundle

INFO Checking if running as non-root

INFO Checking if running inside WSL2

INFO Checking if crc-admin-helper executable is cached

INFO Checking if running on a supported CPU architecture

INFO Checking if crc executable symlink exists

INFO Checking minimum RAM requirements

INFO Checking if Virtualization is enabled

INFO Checking if KVM is enabled

INFO Checking if libvirt is installed

INFO Checking if user is part of libvirt group

INFO Checking if active user/process is currently part of the libvirt group

INFO Checking if libvirt daemon is running

INFO Checking if a supported libvirt version is installed

INFO Checking if crc-driver-libvirt is installed

INFO Checking crc daemon systemd socket units

INFO Checking if systemd-networkd is running

INFO Checking if NetworkManager is installed

INFO Checking if NetworkManager service is running

INFO Checking if dnsmasq configurations file exist for NetworkManager

INFO Checking if the systemd-resolved service is running

INFO Checking if /etc/NetworkManager/dispatcher.d/99-crc.sh exists

INFO Checking if libvirt 'crc' network is available

INFO Checking if libvirt 'crc' network is active

INFO Loading bundle: crc_okd_libvirt_4.14.0-0.okd-2023-12-01-225814_amd64...

INFO Creating CRC VM for OKD 4.14.0-0.okd-2023-12-01-225814...

INFO Generating new SSH key pair...

INFO Generating new password for the kubeadmin user

INFO Starting CRC VM for okd 4.14.0-0.okd-2023-12-01-225814...

INFO CRC instance is running with IP 192.168.130.11

INFO CRC VM is running

INFO Updating authorized keys...

INFO Configuring shared directories

INFO Check internal and public DNS query...

INFO Check DNS query from host...

INFO Verifying validity of the kubelet certificates...

INFO Starting kubelet service

INFO Kubelet client certificate has expired, renewing it... [will take up to 8 minutes]

INFO Kubelet serving certificate has expired, waiting for automatic renewal... [will take up to 8 minutes]

INFO Waiting for kube-apiserver availability... [takes around 2min]

INFO Adding user's pull secret to the cluster...

INFO Updating SSH key to machine config resource...

INFO Waiting until the user's pull secret is written to the instance disk...

INFO Changing the password for the kubeadmin user

INFO Updating cluster ID...

INFO Updating root CA cert to admin-kubeconfig-client-ca configmap...

INFO Starting okd instance... [waiting for the cluster to stabilize]

INFO 2 operators are progressing: kube-apiserver, openshift-controller-manager

INFO 2 operators are progressing: kube-apiserver, openshift-controller-manager

INFO Operator kube-apiserver is progressing

INFO Operator kube-apiserver is progressing

INFO Operator authentication is not yet available

INFO Operator authentication is not yet available

INFO Operator authentication is degraded

INFO All operators are available. Ensuring stability...

INFO Operators are stable (2/3)...

INFO Operators are stable (3/3)...

INFO Adding crc-admin and crc-developer contexts to kubeconfig...

Started the OpenShift cluster.

The server is accessible via web console at:

https://console-openshift-console.apps-crc.testing

Log in as administrator:

Username: kubeadmin

Password: XXXXX-XXXXX-XXXXX-XXXXX

Log in as user:

Username: developer

Password: developer

Use the 'oc' command line interface:

$ eval $(crc oc-env)

$ oc login -u developer https://api.crc.testing:6443

NOTE:

This cluster was built from OKD - The Community Distribution of Kubernetes that powers Red Hat OpenShift.

If you find an issue, please report it at https://github.com/openshift/okd

Congratulations - you now have a working OpenShift cluster running locally! It’s time to explore our newly created OpenShift environment :-)

Take note of the kubeadmin password shown above as we will be using it later.

Exploring our cluster with the oc command line

oc is the official command-line tool for interacting with OpenShift clusters, much like kubectl is for Kubernetes.

While you can download the oc binary from the public OpenShift mirrors, CRC ships with its own bundled copy of oc. Just evaluate the output of crc oc-env to start using it:

eval $(crc oc-env)

Let’s check which versions of oc and OpenShift are installed:

oc version

Sample output:

Client Version: 4.14.0-0.okd-2023-12-01-225814

Kustomize Version: v5.0.1

Server Version: 4.14.0-0.okd-2023-12-01-225814

Kubernetes Version: v1.27.1-3443+19254894103e33-dirty

We see that our OpenShift client and server versions are at 4.14, corresponding to a Kubernetes version of 1.27. As mentioned earlier, OpenShift is Kubernetes at its core, but not just ;-)
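If you would rather extract these numbers in a script than read them off the screen, here is a small awk-based sketch. It pulls the major.minor server and Kubernetes versions out of the plain-text oc version output shown above. The function name is hypothetical, and the sketch relies on the "Server Version:" and "Kubernetes Version:" labels, which could change between oc releases. For anything beyond a quick check, oc version -o json is likely the more robust option.

```shell
# Print "<server major.minor> <kubernetes major.minor>" from `oc version` text on stdin.
server_and_kube_versions() {
  awk -F': ' '
    /^Server Version:/     { split($2, s, "."); srv = s[1] "." s[2] }
    /^Kubernetes Version:/ { v = $2; sub(/^v/, "", v); split(v, k, "."); kube = k[1] "." k[2] }
    END { print srv, kube }
  '
}

# Usage: oc version | server_and_kube_versions
```

Given the sample output above, it should print 4.14 1.27.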

Let’s play with a few oc commands to really convince ourselves that OpenShift is Kubernetes.

View the nodes in our cluster - there should be only 1:

oc get nodes

Sample output:

NAME STATUS ROLES AGE VERSION

crc-d4g2s-master-0 Ready control-plane,master,worker 40d v1.27.6+d548052

Let’s take a closer look at our node. Your node name may differ, so substitute crc-d4g2s-master-0 with the name reported in the output of the previous step:
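Rather than copying the node name by hand, you can capture it in a shell variable. Here is a minimal sketch that assumes a single-node cluster like ours, since it simply takes the first node listed. first_node_name is a hypothetical helper. The same result can be obtained without awk via oc get nodes -o jsonpath='{.items[0].metadata.name}'.

```shell
# Print the name of the first node from `oc get nodes --no-headers` output on stdin.
first_node_name() {
  awk 'NF { print $1; exit }'
}

# Usage (requires a logged-in oc session):
#   NODE=$(oc get nodes --no-headers | first_node_name)
#   oc describe node "$NODE"
```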

oc describe node crc-d4g2s-master-0

Sample output:

Name: crc-d4g2s-master-0

Roles: control-plane,master,worker

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=crc-d4g2s-master-0

kubernetes.io/os=linux

node-role.kubernetes.io/control-plane=

node-role.kubernetes.io/master=

node-role.kubernetes.io/worker=

node.openshift.io/os_id=fedora

topology.hostpath.csi/node=crc-d4g2s-master-0

Annotations: csi.volume.kubernetes.io/nodeid: {"kubevirt.io.hostpath-provisioner":"crc-d4g2s-master-0"}

machine.openshift.io/machine: openshift-machine-api/crc-d4g2s-master-0

machineconfiguration.openshift.io/controlPlaneTopology: SingleReplica

machineconfiguration.openshift.io/currentConfig: rendered-master-937128c862a772f4cab37531e27977f6

machineconfiguration.openshift.io/desiredConfig: rendered-master-937128c862a772f4cab37531e27977f6

machineconfiguration.openshift.io/desiredDrain: uncordon-rendered-master-937128c862a772f4cab37531e27977f6

machineconfiguration.openshift.io/lastAppliedDrain: uncordon-rendered-master-937128c862a772f4cab37531e27977f6

machineconfiguration.openshift.io/lastSyncedControllerConfigResourceVersion: 34100

machineconfiguration.openshift.io/reason:

machineconfiguration.openshift.io/state: Done

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 03 Dec 2023 12:54:31 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: crc-d4g2s-master-0

AcquireTime: <unset>

RenewTime: Fri, 12 Jan 2024 22:56:16 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Fri, 12 Jan 2024 22:52:54 +0800 Fri, 12 Jan 2024 22:37:46 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Fri, 12 Jan 2024 22:52:54 +0800 Fri, 12 Jan 2024 22:37:46 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Fri, 12 Jan 2024 22:52:54 +0800 Fri, 12 Jan 2024 22:37:46 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Fri, 12 Jan 2024 22:52:54 +0800 Fri, 12 Jan 2024 22:38:58 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.126.11

Hostname: crc-d4g2s-master-0

Capacity:

cpu: 4

ephemeral-storage: 31914988Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 9137272Ki

pods: 250

Allocatable:

cpu: 3800m

ephemeral-storage: 29045851293

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 8676472Ki

pods: 250

System Info:

Machine ID: 57cc67c317aa4a1d92b0d8b9a789be8c

System UUID: b7504a6e-aafd-4203-964b-a3c196e3b82a

Boot ID: 9adc8a51-44a3-4ab5-a963-8acf742b7db9

Kernel Version: 6.5.5-200.fc38.x86_64

OS Image: Fedora CoreOS 38.20231027.3.2

Operating System: linux

Architecture: amd64

Container Runtime Version: cri-o://1.27.0

Kubelet Version: v1.27.6+d548052

Kube-Proxy Version: v1.27.6+d548052

Non-terminated Pods: (60 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

hostpath-provisioner csi-hostpathplugin-l756x 0 (0%) 0 (0%) 0 (0%) 0 (0%) 39d

openshift-apiserver-operator openshift-apiserver-operator-6fb8688846-8zzt9 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-apiserver apiserver-678745575d-s8gg2 110m (2%) 0 (0%) 250Mi (2%) 0 (0%) 13m

openshift-authentication-operator authentication-operator-6667569b8d-2qfhd 20m (0%) 0 (0%) 200Mi (2%) 0 (0%) 40d

openshift-authentication oauth-openshift-579b5549b5-2vqfr 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 11m

openshift-cluster-machine-approver machine-approver-865888d45-qbqqb 20m (0%) 0 (0%) 70Mi (0%) 0 (0%) 40d

openshift-cluster-samples-operator cluster-samples-operator-5f7d584d58-z7m4q 20m (0%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-cluster-version cluster-version-operator-7cc7d6cc78-cvf5x 20m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-config-operator openshift-config-operator-77dd576b98-llq4n 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-console-operator console-operator-555f64dfdd-9k8td 20m (0%) 0 (0%) 200Mi (2%) 0 (0%) 40d

openshift-console console-6679fcfc67-ttv8j 10m (0%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-console downloads-557648459f-xwnrb 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-controller-manager-operator openshift-controller-manager-operator-699898cf9c-vfp2t 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-controller-manager controller-manager-84b6b4d58f-9xs6q 100m (2%) 0 (0%) 100Mi (1%) 0 (0%) 12m

openshift-dns-operator dns-operator-6d8b574c64-95wgs 20m (0%) 0 (0%) 69Mi (0%) 0 (0%) 40d

openshift-dns dns-default-l9sgb 60m (1%) 0 (0%) 110Mi (1%) 0 (0%) 40d

openshift-dns node-resolver-f6sdd 5m (0%) 0 (0%) 21Mi (0%) 0 (0%) 40d

openshift-etcd-operator etcd-operator-7885b9b76f-pc45h 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-etcd etcd-crc-d4g2s-master-0 360m (9%) 0 (0%) 910Mi (10%) 0 (0%) 40d

openshift-image-registry cluster-image-registry-operator-79775695c6-ph9gw 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-image-registry image-registry-6cf94f6cf6-d6t82 100m (2%) 0 (0%) 256Mi (3%) 0 (0%) 12m

openshift-image-registry node-ca-k5w4n 10m (0%) 0 (0%) 10Mi (0%) 0 (0%) 40d

openshift-ingress-canary ingress-canary-mnzrz 10m (0%) 0 (0%) 20Mi (0%) 0 (0%) 40d

openshift-ingress-operator ingress-operator-6f4d57d459-bpkb9 20m (0%) 0 (0%) 96Mi (1%) 0 (0%) 40d

openshift-ingress router-default-5c59975b6f-gcths 100m (2%) 0 (0%) 256Mi (3%) 0 (0%) 40d

openshift-kube-apiserver-operator kube-apiserver-operator-9c4dc788b-687gt 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-kube-apiserver kube-apiserver-crc-d4g2s-master-0 290m (7%) 0 (0%) 1224Mi (14%) 0 (0%) 10m

openshift-kube-controller-manager-operator kube-controller-manager-operator-6d696d7f5b-vlxh8 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-kube-controller-manager kube-controller-manager-crc-d4g2s-master-0 80m (2%) 0 (0%) 500Mi (5%) 0 (0%) 40d

openshift-kube-scheduler-operator openshift-kube-scheduler-operator-6967cf8888-s7l9p 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-kube-scheduler openshift-kube-scheduler-crc-d4g2s-master-0 25m (0%) 0 (0%) 150Mi (1%) 0 (0%) 40d

openshift-kube-storage-version-migrator-operator kube-storage-version-migrator-operator-7f56f455c7-9qm2x 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-kube-storage-version-migrator migrator-54754c959-rpbdh 10m (0%) 0 (0%) 200Mi (2%) 0 (0%) 40d

openshift-machine-api control-plane-machine-set-operator-7d8877fb45-bfkcd 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-machine-api machine-api-controllers-548c55cccd-x45wq 70m (1%) 0 (0%) 140Mi (1%) 0 (0%) 40d

openshift-machine-api machine-api-operator-659b96dd6f-b2pzw 20m (0%) 0 (0%) 70Mi (0%) 0 (0%) 40d

openshift-machine-config-operator machine-config-controller-794b56d4dc-xk7jc 40m (1%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-machine-config-operator machine-config-daemon-qgct8 40m (1%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-machine-config-operator machine-config-operator-84dc94fd78-9x5wj 40m (1%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-machine-config-operator machine-config-server-cvrfx 20m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-marketplace community-operators-ccvhg 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-marketplace marketplace-operator-6874dc8464-lmlvx 1m (0%) 0 (0%) 5Mi (0%) 0 (0%) 40d

openshift-multus multus-4xhrh 10m (0%) 0 (0%) 65Mi (0%) 0 (0%) 40d

openshift-multus multus-additional-cni-plugins-62lw4 10m (0%) 0 (0%) 10Mi (0%) 0 (0%) 40d

openshift-multus multus-admission-controller-5f4676c68-29b2m 20m (0%) 0 (0%) 70Mi (0%) 0 (0%) 39d

openshift-multus network-metrics-daemon-kwd8t 20m (0%) 0 (0%) 120Mi (1%) 0 (0%) 40d

openshift-network-diagnostics network-check-source-7894f8cc69-88mhc 10m (0%) 0 (0%) 40Mi (0%) 0 (0%) 40d

openshift-network-diagnostics network-check-target-knh9x 10m (0%) 0 (0%) 15Mi (0%) 0 (0%) 40d

openshift-network-node-identity network-node-identity-ffl7r 20m (0%) 0 (0%) 100Mi (1%) 0 (0%) 40d

openshift-network-operator network-operator-fd55479ff-6ld9p 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-oauth-apiserver apiserver-5d49456867-jpzrt 150m (3%) 0 (0%) 200Mi (2%) 0 (0%) 40d

openshift-operator-lifecycle-manager catalog-operator-fc994d6b6-tj8h5 10m (0%) 0 (0%) 80Mi (0%) 0 (0%) 40d

openshift-operator-lifecycle-manager olm-operator-75bbbb5559-zft8l 10m (0%) 0 (0%) 160Mi (1%) 0 (0%) 40d

openshift-operator-lifecycle-manager package-server-manager-7c55fcf5f7-4dqpc 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-operator-lifecycle-manager packageserver-999d54647-66s5p 10m (0%) 0 (0%) 50Mi (0%) 0 (0%) 40d

openshift-route-controller-manager route-controller-manager-8559f9c7cb-trktg 100m (2%) 0 (0%) 100Mi (1%) 0 (0%) 12m

openshift-sdn sdn-controller-b49gh 20m (0%) 0 (0%) 70Mi (0%) 0 (0%) 40d

openshift-sdn sdn-v64p2 110m (2%) 0 (0%) 220Mi (2%) 0 (0%) 40d

openshift-service-ca-operator service-ca-operator-56c579c485-cpwv7 10m (0%) 0 (0%) 80Mi (0%) 0 (0%) 40d

openshift-service-ca service-ca-6c5d767655-7xw57 10m (0%) 0 (0%) 120Mi (1%) 0 (0%) 40d

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 2321m (61%) 0 (0%)

memory 7707Mi (90%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal NodeHasSufficientMemory 40d (x8 over 40d) kubelet Node crc-d4g2s-master-0 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 40d (x8 over 40d) kubelet Node crc-d4g2s-master-0 status is now: NodeHasNoDiskPressure

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 40d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 39d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal NodeNotReady 39d node-controller Node crc-d4g2s-master-0 status is now: NodeNotReady

Normal Starting 39d kubelet Starting kubelet.

Normal NodeAllocatableEnforced 39d kubelet Updated Node Allocatable limit across pods

Normal NodeHasSufficientPID 39d (x7 over 39d) kubelet Node crc-d4g2s-master-0 status is now: NodeHasSufficientPID

Normal NodeHasNoDiskPressure 39d (x8 over 39d) kubelet Node crc-d4g2s-master-0 status is now: NodeHasNoDiskPressure

Normal NodeHasSufficientMemory 39d (x8 over 39d) kubelet Node crc-d4g2s-master-0 status is now: NodeHasSufficientMemory

Normal RegisteredNode 39d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 39d node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal NodeNotReady 39d node-controller Node crc-d4g2s-master-0 status is now: NodeNotReady

Normal RegisteredNode 18m node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

Normal RegisteredNode 10m node-controller Node crc-d4g2s-master-0 event: Registered Node crc-d4g2s-master-0 in Controller

In particular, notice that the OS image reported under “System Info” above is Fedora CoreOS 38.20231027.3.2. Fedora CoreOS (FCOS) is a container-optimized OS from the Fedora project designed to run containerized workloads at scale. Notably, it is the only supported operating system for OKD control plane nodes, while OKD worker nodes can run Fedora Server as well. Since OKD only supports Fedora as the underlying operating system, it is opinionated in this sense compared to upstream Kubernetes. Upstream Kubernetes works on most Linux distributions and even supports Windows worker nodes (albeit with a limited subset of features). Not surprisingly, the commercial OCP distribution downstream of OKD requires Red Hat Enterprise Linux CoreOS (RHCOS) for its control plane nodes and optionally allows RHEL for its worker nodes. Both of these are, in turn, downstream of Fedora.
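To pull out just the OS image instead of scanning the full describe output, here is a hedged sketch that extracts the "OS Image:" field from oc describe node output on stdin. os_image_of is a hypothetical helper, and the field label is taken from the sample output above. A jsonpath query against .status.nodeInfo.osImage is an alternative that avoids text parsing.

```shell
# Print the value of the "OS Image:" line(s) from `oc describe node` output on stdin.
os_image_of() {
  sed -n 's/^[[:space:]]*OS Image:[[:space:]]*//p'
}

# Usage:
#   oc describe node crc-d4g2s-master-0 | os_image_of
#   # or, without parsing text:
#   oc get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.osImage}{"\n"}{end}'
```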

Now let’s see how many pods are running on our cluster:

oc get pods --all-namespaces --no-headers | wc -l

Sample output:

69

That’s a lot of pods on a freshly installed cluster! Compare this to a fresh Minikube cluster which has only 7 pods. But when you recall that OpenShift is a full-fledged IDP as opposed to a minimal infrastructure layer, everything suddenly makes sense ;-)
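To see where all those pods live, the sketch below groups the same listing by namespace. One caveat: --all-namespaces also lists pods that have already completed, such as OpenShift's one-off installer pods. That likely explains why this count is higher than the 60 non-terminated pods reported by oc describe node earlier. Adding --field-selector=status.phase=Running restricts the listing to running pods. pods_per_namespace is a hypothetical helper name.

```shell
# Summarize pods per namespace from `oc get pods --all-namespaces --no-headers`
# output on stdin: one "<count> <namespace>" line per namespace, largest first.
pods_per_namespace() {
  awk 'NF { count[$1]++ } END { for (ns in count) print count[ns], ns }' |
    sort -k1,1nr -k2,2
}

# Usage:
#   oc get pods --all-namespaces --no-headers | pods_per_namespace | head
#   # Count only running pods:
#   oc get pods --all-namespaces --no-headers --field-selector=status.phase=Running | wc -l
```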

View the available namespaces:

oc get namespaces

Sample output:

NAME STATUS AGE

default Active 40d

hostpath-provisioner Active 39d

kube-node-lease Active 40d

kube-public Active 40d

kube-system Active 40d

openshift Active 40d

openshift-apiserver Active 40d

openshift-apiserver-operator Active 40d

openshift-authentication Active 40d

openshift-authentication-operator Active 40d

openshift-cloud-controller-manager Active 40d

openshift-cloud-controller-manager-operator Active 40d

openshift-cloud-credential-operator Active 40d

openshift-cloud-network-config-controller Active 40d

openshift-cluster-machine-approver Active 40d

openshift-cluster-samples-operator Active 40d

openshift-cluster-storage-operator Active 40d

openshift-cluster-version Active 40d

openshift-config Active 40d

openshift-config-managed Active 40d

openshift-config-operator Active 40d

openshift-console Active 40d

openshift-console-operator Active 40d

openshift-console-user-settings Active 40d

openshift-controller-manager Active 40d

openshift-controller-manager-operator Active 40d

openshift-dns Active 40d

openshift-dns-operator Active 40d

openshift-etcd Active 40d

openshift-etcd-operator Active 40d

openshift-host-network Active 40d

openshift-image-registry Active 40d

openshift-infra Active 40d

openshift-ingress Active 40d

openshift-ingress-canary Active 40d

openshift-ingress-operator Active 40d

openshift-kni-infra Active 40d

openshift-kube-apiserver Active 40d

openshift-kube-apiserver-operator Active 40d

openshift-kube-controller-manager Active 40d

openshift-kube-controller-manager-operator Active 40d

openshift-kube-scheduler Active 40d

openshift-kube-scheduler-operator Active 40d

openshift-kube-storage-version-migrator Active 40d

openshift-kube-storage-version-migrator-operator Active 40d

openshift-machine-api Active 40d

openshift-machine-config-operator Active 40d

openshift-marketplace Active 40d

openshift-monitoring Active 40d

openshift-multus Active 40d

openshift-network-diagnostics Active 40d

openshift-network-node-identity Active 40d

openshift-network-operator Active 40d

openshift-node Active 40d

openshift-nutanix-infra Active 40d

openshift-oauth-apiserver Active 40d

openshift-openstack-infra Active 40d

openshift-operator-lifecycle-manager Active 40d

openshift-operators Active 40d

openshift-ovirt-infra Active 40d

openshift-route-controller-manager Active 40d

openshift-sdn Active 40d

openshift-service-ca Active 40d

openshift-service-ca-operator Active 40d

openshift-user-workload-monitoring Active 40d

openshift-vsphere-infra Active 40d

Again, that’s a lot. Apart from the Kubernetes system namespaces (default, kube-system, kube-node-lease, kube-public) and hostpath-provisioner, which contains a CSI driver for dynamically provisioning storage (think PVCs), the remaining 61 namespaces all have names starting with openshift. These are reserved by OpenShift for running critical cluster components and services.
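A quick way to verify that split is to count namespaces by name prefix. Below is a minimal awk sketch that reads oc get namespaces --no-headers output on stdin. count_namespaces_by_prefix is a hypothetical helper name.

```shell
# Count namespaces by prefix from `oc get namespaces --no-headers` output on stdin.
# Prints "<names starting with openshift> <all other names>".
count_namespaces_by_prefix() {
  awk 'NF { if ($1 ~ /^openshift/) os++; else other++ }
       END { print os + 0, other + 0 }'
}

# Usage: oc get namespaces --no-headers | count_namespaces_by_prefix
```

Fed the sample output above, it should print 61 5.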

View the services in the default namespace:

oc get services

Sample output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.217.4.1 <none> 443/TCP 40d

openshift ExternalName <none> kubernetes.default.svc.cluster.local <none> 40d

Not much different from a fresh Kubernetes install, except for the additional openshift service. This is an ExternalName service pointing to the kubernetes service, i.e. a CNAME alias from the DNS perspective.
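For illustration, here is a sketch of how an ExternalName service like this one could be declared. It is wrapped in a shell function that prints the manifest, so the output can be piped to oc apply. The function and the example service name my-alias are hypothetical. You can compare the output with the real object via oc get svc openshift -o yaml.

```shell
# Print a Service manifest of type ExternalName: a DNS CNAME from name $1 to FQDN $2.
externalname_service() {
  local name=$1 target=$2
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ExternalName
  externalName: ${target}
EOF
}

# Usage (hypothetical service name "my-alias"):
#   externalname_service my-alias kubernetes.default.svc.cluster.local | oc apply -f -
```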

Let’s try out an NGINX workload. Create an NGINX deployment with 2 replicas and expose port 80 for HTTP traffic:

oc create deploy nginx --image=nginx --replicas=2 --port=80

Expose this deployment as a service:

oc expose deploy nginx
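The two imperative commands above can also be expressed declaratively. Below is a sketch of roughly equivalent manifests, again wrapped in a hypothetical shell function. They are hand-written to mirror what oc create deploy and oc expose typically generate (app=nginx labels and selector). To see exactly what your version of oc would produce, append --dry-run=client -o yaml to the original commands.

```shell
# Print a Deployment and a Service roughly equivalent to:
#   oc create deploy nginx --image=nginx --replicas=2 --port=80
#   oc expose deploy nginx
nginx_manifests() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
EOF
}

# Usage: nginx_manifests | oc apply -f -
```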

View the deployment and corresponding service:

oc get svc,deploy -l app=nginx

Sample output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx ClusterIP 10.217.5.118 <none> 80/TCP 54s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 2/2 2 2 60s

Now run a pod with cURL pre-installed and use it to send a cURL request to our NGINX deployment:

oc run curlpod --image=curlimages/curl -- sleep infinity

oc wait --for=condition=Ready pods --all --timeout=300s

oc exec curlpod -- curl -s nginx

Sample output of the last command:

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Let’s go further and use port forwarding to make the NGINX service available locally on port 8080:

oc port-forward svc/nginx 8080:80

In a new terminal window, make oc available for your session, then follow the NGINX logs so you can watch new requests arrive in the next step:

eval $(crc oc-env)

oc logs -f -l app=nginx

Now open a web browser and visit localhost:8080. Refresh the page 1 or 2 times.

Here’s what we get in our logs:

::1 - - [11/Jan/2024:12:54:05 +0000] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" "-"

2024/01/11 12:54:05 [error] 30#30: *7 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: ::1, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "localhost:8080", referrer: "http://localhost:8080/"

::1 - - [11/Jan/2024:12:54:05 +0000] "GET /favicon.ico HTTP/1.1" 404 153 "http://localhost:8080/" "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" "-"

::1 - - [11/Jan/2024:12:54:13 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" "-"

::1 - - [11/Jan/2024:12:54:14 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" "-"

::1 - - [11/Jan/2024:12:55:04 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" "-"

We see a 200 OK response to the initial visit by our web browser (Firefox in my case) and a failed attempt to fetch the favicon (since we don’t have one). These are followed by 304 Not Modified responses for the subsequent reloads. A 304 tells the browser that its cached copy is still valid, which saves NGINX from sending the contents of the same webpage over and over, improving response times and reducing network traffic.
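To tally the response codes without eyeballing the log, here is a small awk sketch. It reads NGINX access-log lines on stdin, in the default combined format shown above, and counts them by status code. It skips error-log lines such as the favicon message. status_counts is a hypothetical helper name.

```shell
# Count NGINX access-log lines by HTTP status code; stdin: log lines.
# In the combined log format the status follows the quoted request line,
# i.e. it is the first word of the third '"'-separated field.
status_counts() {
  awk -F'"' '{
      split($3, f, " ")
      if (f[1] ~ /^[0-9][0-9][0-9]$/) count[f[1]]++
    }
    END { for (s in count) print s, count[s] }' | sort
}

# Usage: oc logs -l app=nginx | status_counts
```

Fed the log excerpt above (five access-log lines plus one error line), it should print 200 1, 304 3 and 404 1 on separate lines.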

Hopefully all of this is sufficient to convince you that OpenShift is in fact Kubernetes and oc is kubectl.

Let’s delete our NGINX deployment and associated resources. Close the second terminal window, return to the first one, press Ctrl+C to stop port forwarding, then run the following commands:

oc delete svc nginx

oc delete deploy nginx

oc delete po curlpod

Before we move on to the next section, let’s see how oc is more than just kubectl by looking at a command that only exists in oc. Feel free to run it yourself to view its help text:

oc adm policy add-scc-to-user --help

Sample output:

Add a security context constraint to users or a service account.

Examples:

# Add the 'restricted' security context constraint to user1 and user2

oc adm policy add-scc-to-user restricted user1 user2

# Add the 'privileged' security context constraint to serviceaccount1 in the current namespace

oc adm policy add-scc-to-user privileged -z serviceaccount1

Options:

--allow-missing-template-keys=true:

If true, ignore any errors in templates when a field or map key is missing in the template. Only applies to golang and jsonpath output formats.

--dry-run='none':

Must be "none", "server", or "client". If client strategy, only print the object that would be sent, without sending it. If server strategy, submit server-side request without persisting the resource.

-o, --output='':

Output format. One of: (json, yaml, name, go-template, go-template-file, template, templatefile, jsonpath, jsonpath-as-json, jsonpath-file).

-z, --serviceaccount=[]:

service account in the current namespace to use as a user

--show-managed-fields=false:

If true, keep the managedFields when printing objects in JSON or YAML format.

--template='':

Template string or path to template file to use when -o=go-template, -o=go-template-file. The template format is golang templates [http://golang.org/pkg/text/template/#pkg-overview].

Usage:

oc adm policy add-scc-to-user SCC (USER | -z SERVICEACCOUNT) [USER ...] [flags] [options]

Use "oc options" for a list of global command-line options (applies to all commands).

The add-scc-to-user command grants a security context constraint (SCC) to a given user or service account. As the name suggests, SCCs place constraints on the security context that a Pod running as a particular user or service account can request, such as whether it may run privileged containers or run as an arbitrary UID. They are a form of mandatory access control (MAC), conceptually similar to SELinux but applied to Pods on OpenShift.

Exploring our cluster with the OpenShift web console

So far, we’ve only interacted with OpenShift via the command line. What really makes OpenShift shine, though, is its integrated, out-of-the-box web console. The console helps you quickly visualize the state of the entire cluster as well as individual microservices, greatly easing the burden on developers and operations staff - no more 20 questions with kubectl ;-)

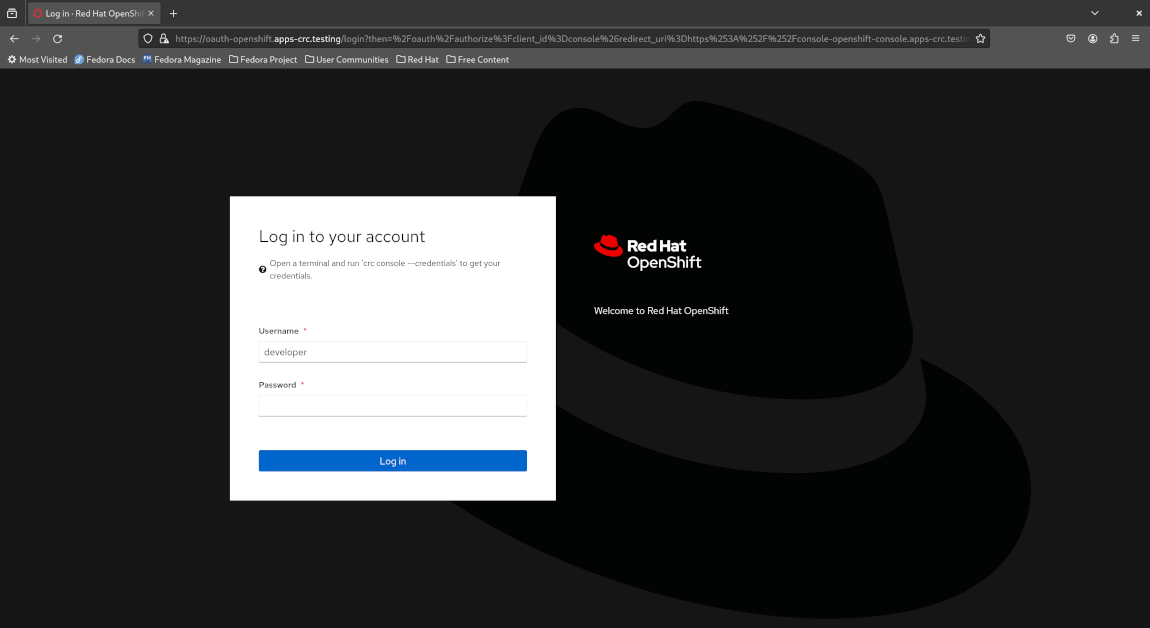

Point your browser to https://console-openshift-console.apps-crc.testing and ignore the certificate warnings reported by your browser. This should bring you to the OpenShift login portal.

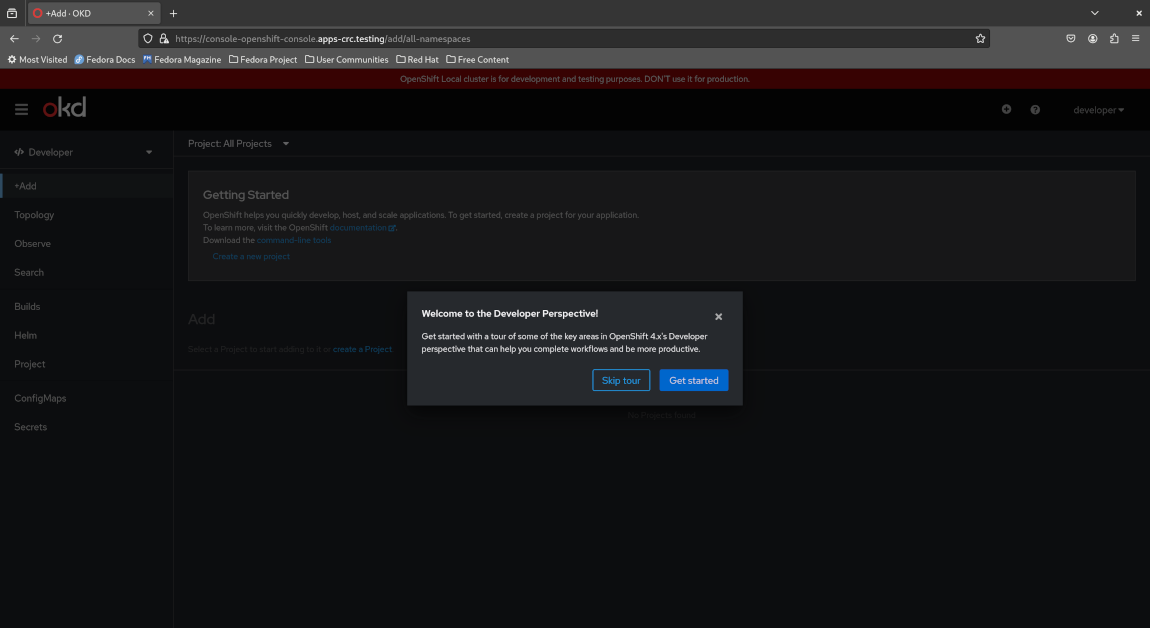

Now log in with developer as both the username and password. This should bring you to the Developer dashboard and greet you with a guided tour.

Check out the guided tour and feel free to explore the Developer perspective of the OpenShift web console before we proceed with the next section.

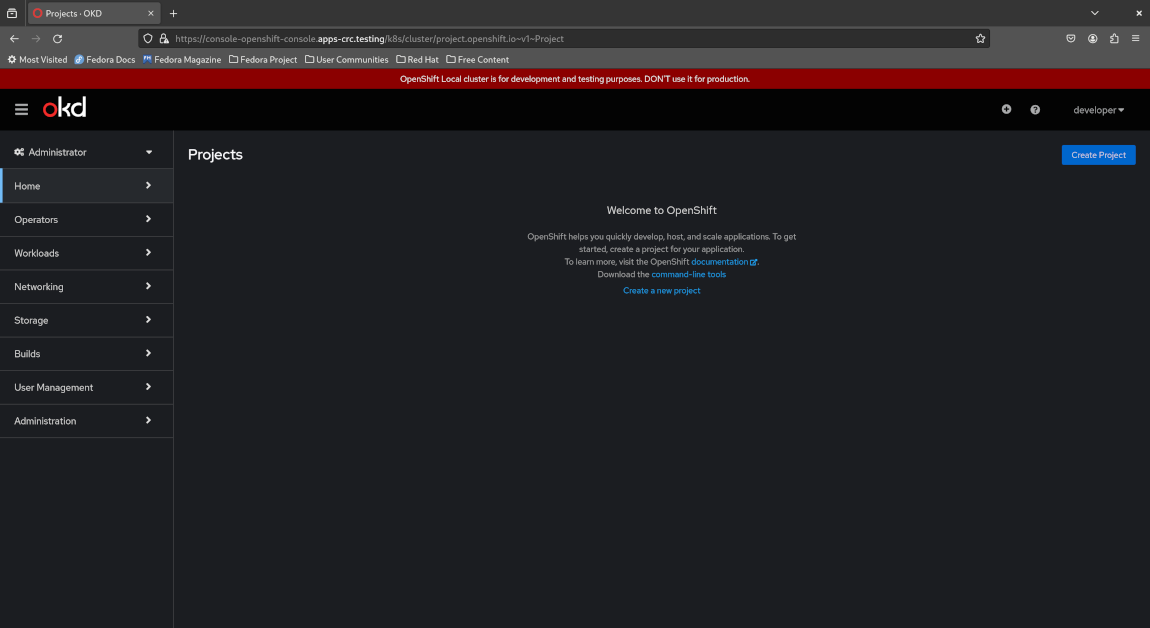

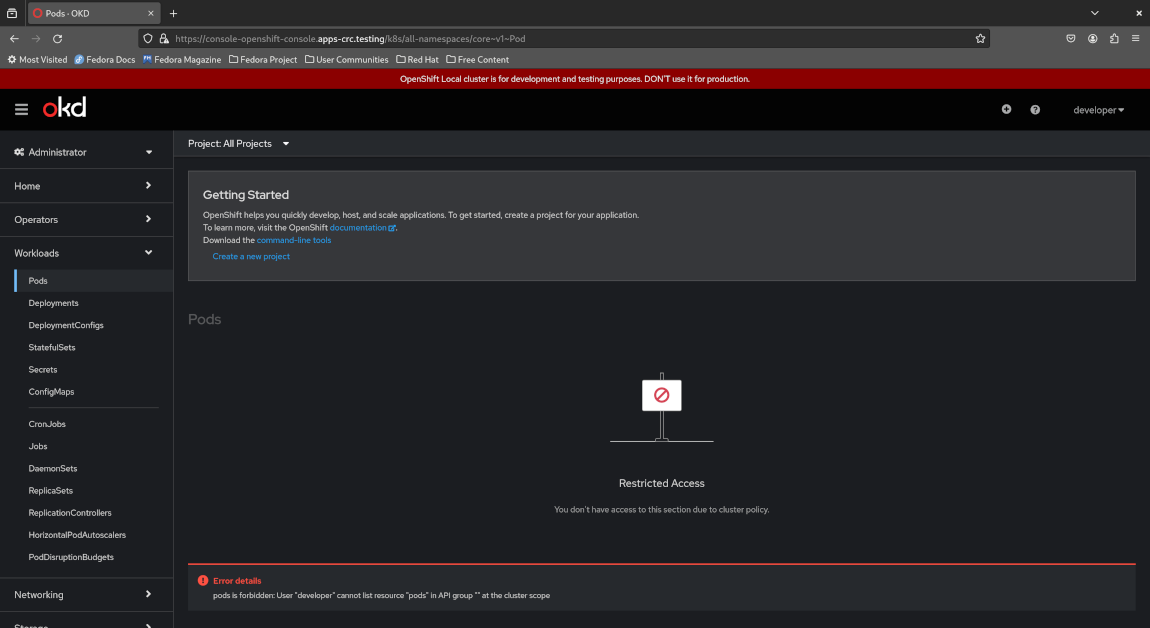

While still logged in as developer, click the “Developer” dropdown at the top left and switch to the “Administrator” perspective.

Notice how the Administrator dashboard only prompts you to create a new project and doesn’t display any useful information about the cluster itself. For example, if you select “Workloads > Pods”, it’ll inform you that your access is restricted and that you cannot list the Pods running across the cluster. This is role-based access control (RBAC) in action. OpenShift ships sane RBAC defaults for unprivileged users, which greatly eases RBAC management compared to vanilla Kubernetes, where (cluster) roles and (cluster) role bindings for users and groups must be defined manually.

Now log out of the developer account and log in as kubeadmin, which has cluster-wide administrator access, using the password you noted earlier. If you forgot to note the password, you can retrieve it with crc as follows:

crc console --credentials

Sample output (password elided for security reasons):

To login as a regular user, run 'oc login -u developer -p developer https://api.crc.testing:6443'.

To login as an admin, run 'oc login -u kubeadmin -p XXXXX-XXXXX-XXXXX-XXXXX https://api.crc.testing:6443'
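If you need the password in a script, a small filter over that output does the job. The sketch below defines a hypothetical kubeadmin_password helper. It assumes the admin line keeps the wording shown above, with -u kubeadmin -p followed by the password and a space. Here it is exercised against the sample output rather than a live CRC instance.

```shell
#!/bin/sh
# Hypothetical helper: print the kubeadmin password found in the output of
# `crc console --credentials`, read from stdin. Assumes the admin line contains
# "-u kubeadmin -p <password> " exactly as in the sample output above.
kubeadmin_password() {
  sed -n 's/.*-u kubeadmin -p \([^ ]*\) .*/\1/p'
}

# Sample output from above (password elided).
sample_output="To login as a regular user, run 'oc login -u developer -p developer https://api.crc.testing:6443'.
To login as an admin, run 'oc login -u kubeadmin -p XXXXX-XXXXX-XXXXX-XXXXX https://api.crc.testing:6443'"

# Only the admin line matches, so only the kubeadmin password is printed.
printf '%s\n' "$sample_output" | kubeadmin_password
```

Against a running instance, the equivalent would be crc console --credentials | kubeadmin_password.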

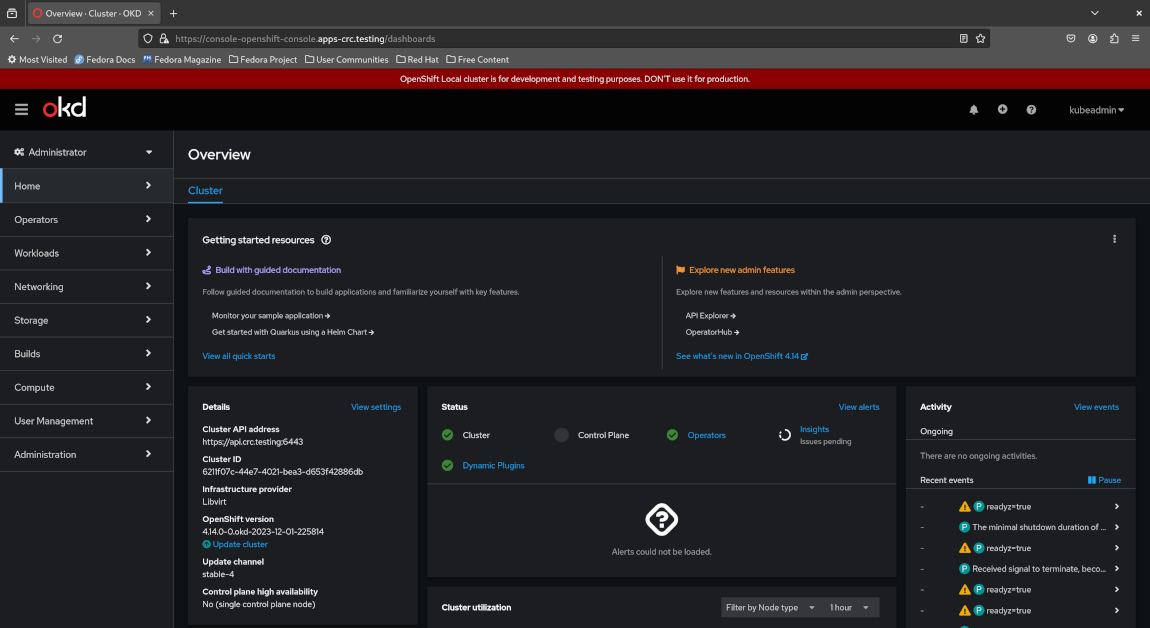

Once logged in as kubeadmin, notice how you are able to immediately view a summary of the entire cluster via the Administrator dashboard.

Again, feel free to poke around and get a feel for the Administrator perspective in OpenShift before we proceed to the next section.

Setting up Argo CD with the Operator

Another area where OpenShift really shines is its extensive use of software operators. Operators install cluster-level applications and add-on features and manage their lifecycle, all through the OperatorHub in the web console, which reduces installing an operator to a few clicks. Software operators encode human operational knowledge of managing a particular application into a software controller that performs these operational tasks in a fully automated manner. This frees the cluster administrator from the mundane task of managing that application and allows them to focus on tasks that deliver actual business value.

The operator pattern is not unique to OpenShift (see Canonical’s Juju charms for another example). The concept of Kubernetes operators was introduced by CoreOS, which was later acquired by Red Hat. Red Hat then donated the Operator Framework to the CNCF, where it is now an incubating project that can be installed on any standard Kubernetes cluster. The main value proposition that OpenShift offers in this regard is native support for operators baked into the cluster at installation time. It also makes operators easy to install via the web console, as opposed to crafting the required manifests, such as custom resource definitions (CRDs), by hand and applying them via the command line.

In the following section, we will install the Argo CD operator on OpenShift via the web console. We will then create an Argo CD instance by defining a minimal ArgoCD custom resource, letting the operator automate the installation and complete lifecycle management of that instance in a fully transparent fashion. Argo CD is a declarative GitOps continuous delivery tool for Kubernetes. Together with companion projects such as Argo Rollouts, it eases the implementation of modern application deployment patterns such as blue-green and canary deployments via Git-centric workflows, though we won’t focus on Argo CD itself for the purposes of this lab.

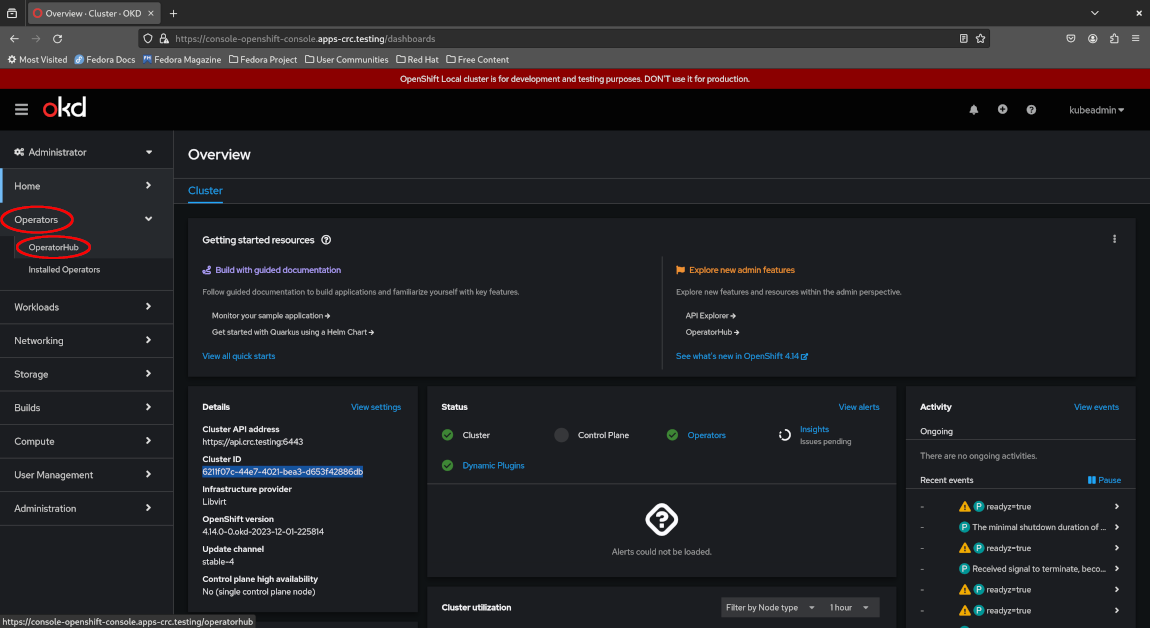

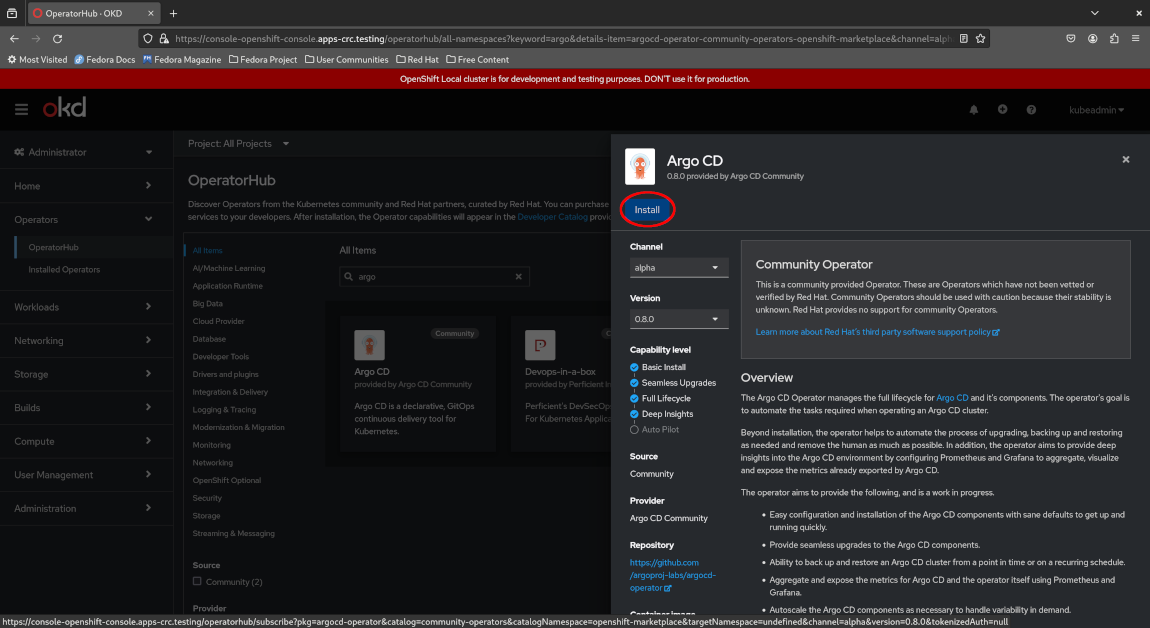

Log in to the web console as the cluster administrator kubeadmin, then select “Operators > OperatorHub”.

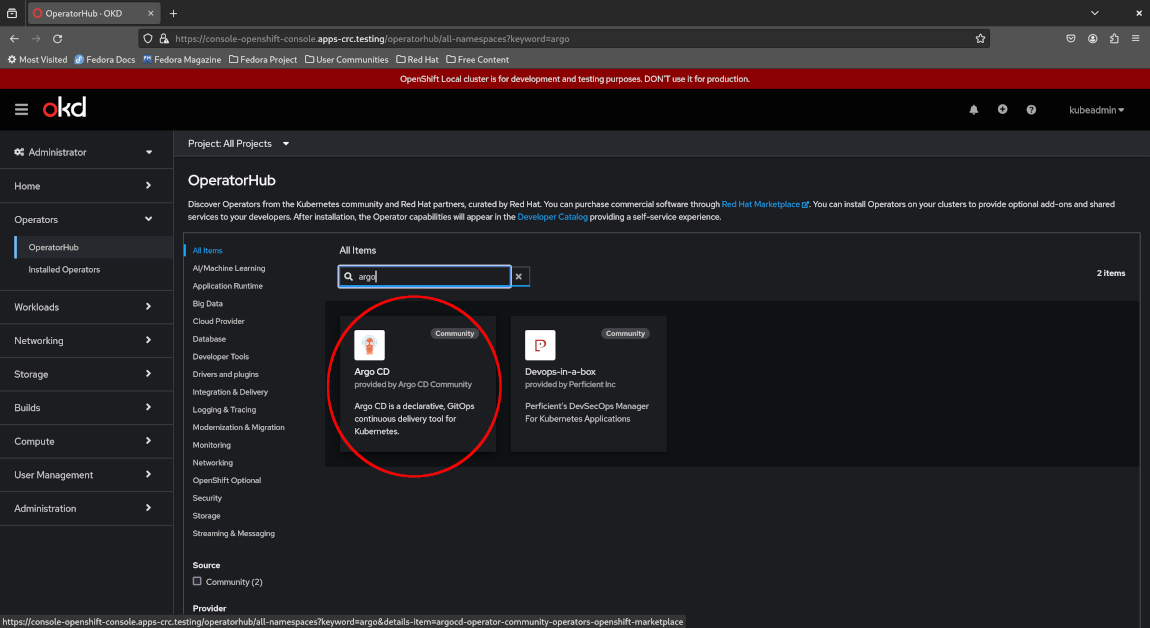

Next, search for “Argo CD” and select the Argo CD operator.

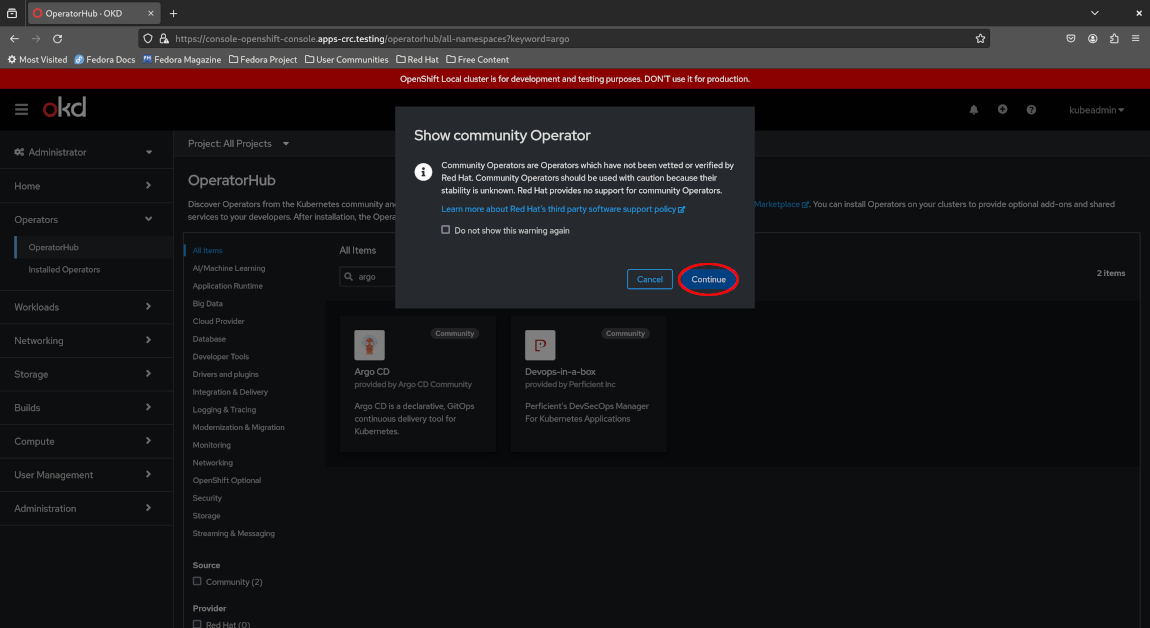

OpenShift will warn that this is a community Operator which is not officially supported by Red Hat. Acknowledge the warning by clicking “Continue”.

View the description for the Argo CD operator and click “Install”.

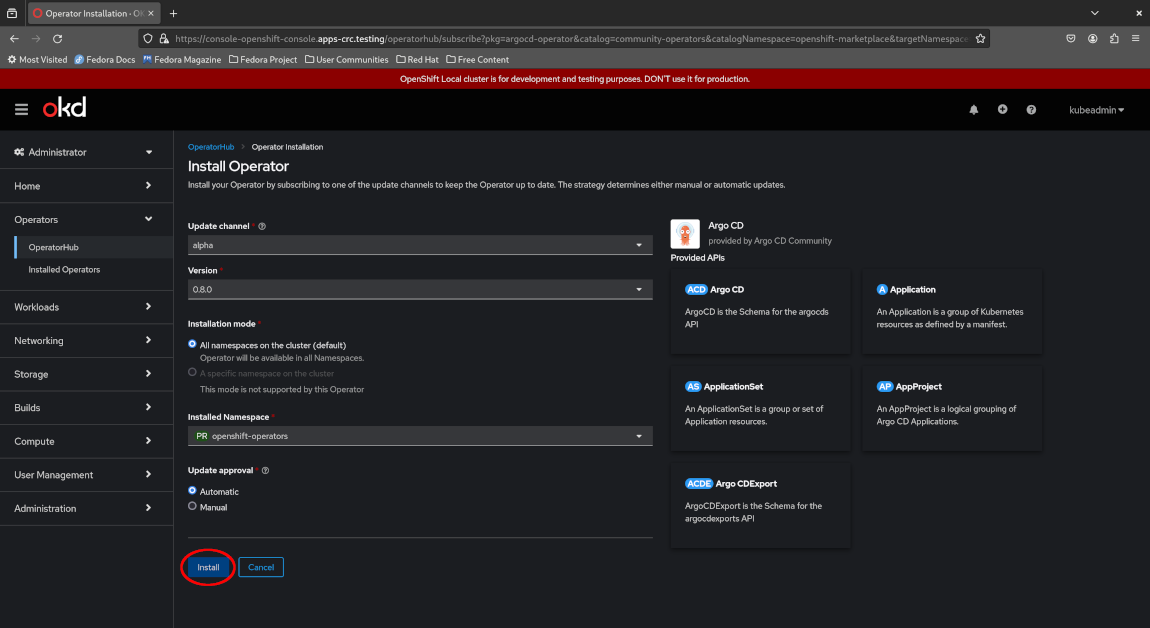

Before confirming the installation, OpenShift allows you to customize a few options such as selecting the version to install and the channel to install from. Leave the options at their defaults and click “Install” to proceed with the installation.

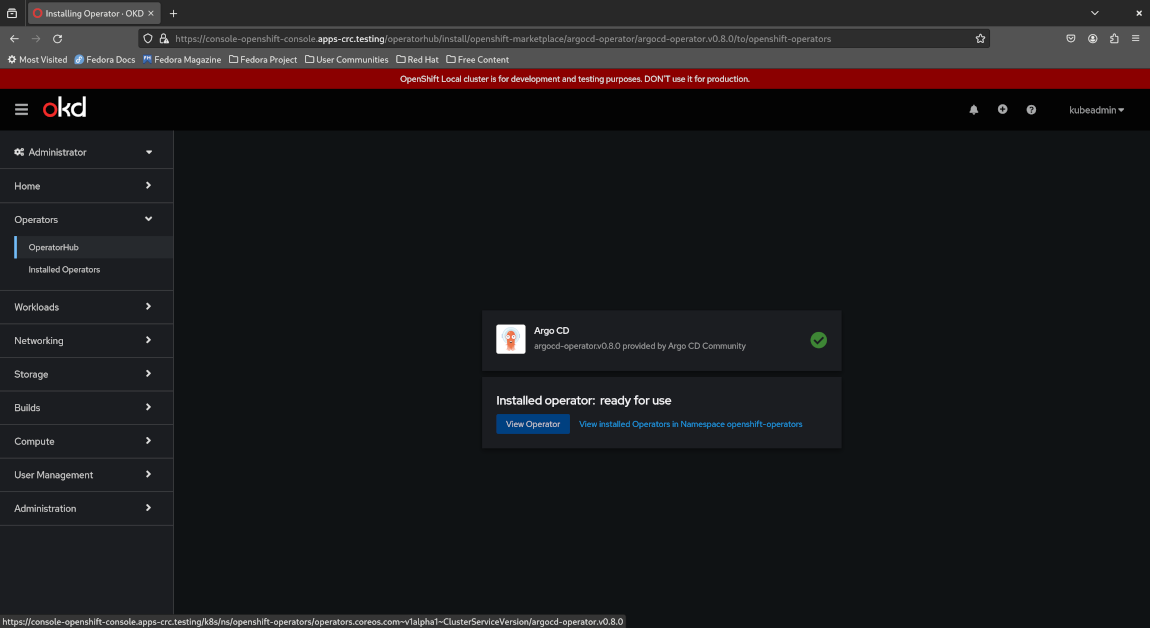

Now wait a few minutes for the operator to install, until the page below indicates “Installed operator: ready for use” with a green checkmark.

Congratulations - you have successfully installed your first operator on OpenShift!

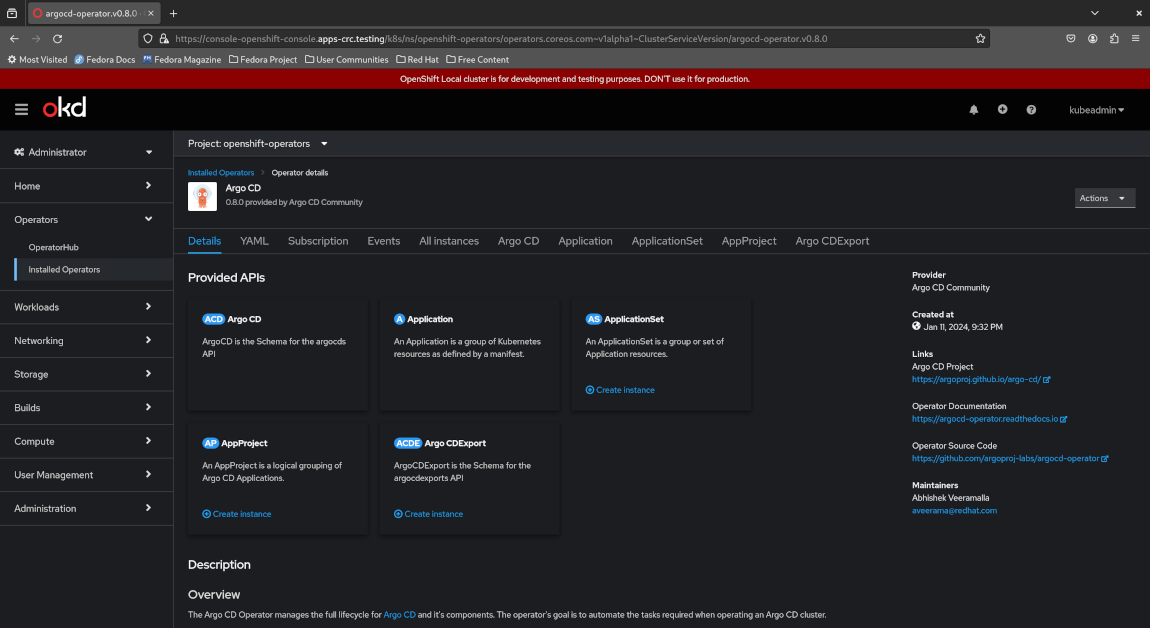

Click the “View operator” button in the middle of the page to view the details of your newly installed Argo CD operator.

Notice the ArgoCD API provided by the Argo CD operator at the top left. ArgoCD is a high-level custom resource type defined by the operator that represents an entire Argo CD instance. All we need to do is create a custom resource of type ArgoCD and apply it to the cluster. The operator then automatically deploys an Argo CD instance and manages its entire lifecycle for us, with no manual intervention required. This also means we can create multiple Argo CD instances in different namespaces and have the operator manage all of them transparently, simply by defining multiple ArgoCD custom resources and applying them to our OpenShift cluster.
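To illustrate that last point, the sketch below emits one minimal ArgoCD custom resource per namespace. It uses the same apiVersion, kind and empty .spec as the manifest we apply in this lab. The argocd_manifests function and the team-a / team-b namespace names are hypothetical, and those namespaces would need to exist before the output is applied.

```shell
#!/bin/sh
# Hypothetical helper: print one minimal ArgoCD custom resource per namespace
# passed as an argument, as a multi-document YAML stream.
argocd_manifests() {
  for ns in "$@"; do
    cat <<EOF
---
apiVersion: argoproj.io/v1beta1
kind: ArgoCD
metadata:
  name: argocd
  namespace: ${ns}
spec: {}
EOF
  done
}

# Placeholder namespace names for illustration.
argocd_manifests team-a team-b
```

Piping the output into oc apply -f - would then ask the operator for two independent Argo CD instances, one per namespace.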

Open a new terminal window, make oc available in our PATH, then create the argocd namespace for our Argo CD instance and create a corresponding minimal ArgoCD custom resource by leaving the .spec field empty.

eval $(crc oc-env)

oc create ns argocd

oc apply -f - << EOF
apiVersion: argoproj.io/v1beta1
kind: ArgoCD
metadata:
  name: argocd
  namespace: argocd
spec: {}
EOF

Now wait up to 5 minutes for the Argo CD instance to become available:

oc -n argocd wait \
  --for=jsonpath='{.status.phase}'=Available \
  argocd \
  --all \
  --timeout=300s

Sample output:

argocd.argoproj.io/argocd condition met
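oc wait saves us from writing a polling loop ourselves. For comparison, a hand-rolled equivalent could look like the sketch below. wait_for_output is a hypothetical helper, not part of oc, that re-runs an arbitrary command until its output equals the expected value or the timeout expires.

```shell
#!/bin/sh
# Hypothetical helper (not part of oc): re-run a command every INTERVAL seconds
# until its stdout equals EXPECTED, giving up after TIMEOUT seconds.
# Usage: wait_for_output EXPECTED TIMEOUT INTERVAL COMMAND [ARGS...]
wait_for_output() {
  expected="$1"; timeout="$2"; interval="$3"
  shift 3
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # Ignore errors such as "not found" while the resource is still being created.
    if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
      return 0
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "timed out after ${timeout}s waiting for '${expected}'" >&2
  return 1
}

# Trivial local demonstration: `echo Available` matches on the first attempt.
wait_for_output Available 5 1 echo Available && echo "condition met"
```

Against the cluster, a rough equivalent of the oc wait command above would be wait_for_output Available 300 5 oc -n argocd get argocd argocd -o jsonpath='{.status.phase}'.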

Let’s see the pods created for our Argo CD instance:

oc -n argocd get po

Sample output:

NAME                                  READY   STATUS    RESTARTS   AGE
argocd-application-controller-0       1/1     Running   0          14m
argocd-redis-9c4df56bc-mqh8d          1/1     Running   0          14m
argocd-repo-server-689fdf7d9d-ngpn8   1/1     Running   0          14m
argocd-server-76c4849577-j7l5n        1/1     Running   0          14m
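Rather than eyeballing the READY and STATUS columns, we could check them with a small filter. The sketch below defines a hypothetical all_pods_ready helper. It assumes the default oc get po column layout shown above (NAME, READY, STATUS, ...) and succeeds only if every pod is Running with all of its containers ready.

```shell
#!/bin/sh
# Hypothetical helper: read `oc get po` output on stdin and exit 0 only if
# every pod row has STATUS "Running" and READY "n/n" (all containers ready).
# Assumes the default column order: NAME READY STATUS RESTARTS AGE.
all_pods_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) notready++
       }
       END { if (NR < 2 || notready > 0) exit 1 }'
}

# Sample output from above.
sample_pods='NAME READY STATUS RESTARTS AGE
argocd-application-controller-0 1/1 Running 0 14m
argocd-redis-9c4df56bc-mqh8d 1/1 Running 0 14m
argocd-repo-server-689fdf7d9d-ngpn8 1/1 Running 0 14m
argocd-server-76c4849577-j7l5n 1/1 Running 0 14m'

printf '%s\n' "$sample_pods" | all_pods_ready && echo "all pods ready"
```

On the cluster, this would be oc -n argocd get po | all_pods_ready. The built-in alternative oc -n argocd wait --for=condition=Ready pod --all --timeout=300s avoids parsing table output altogether and is the more robust choice.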

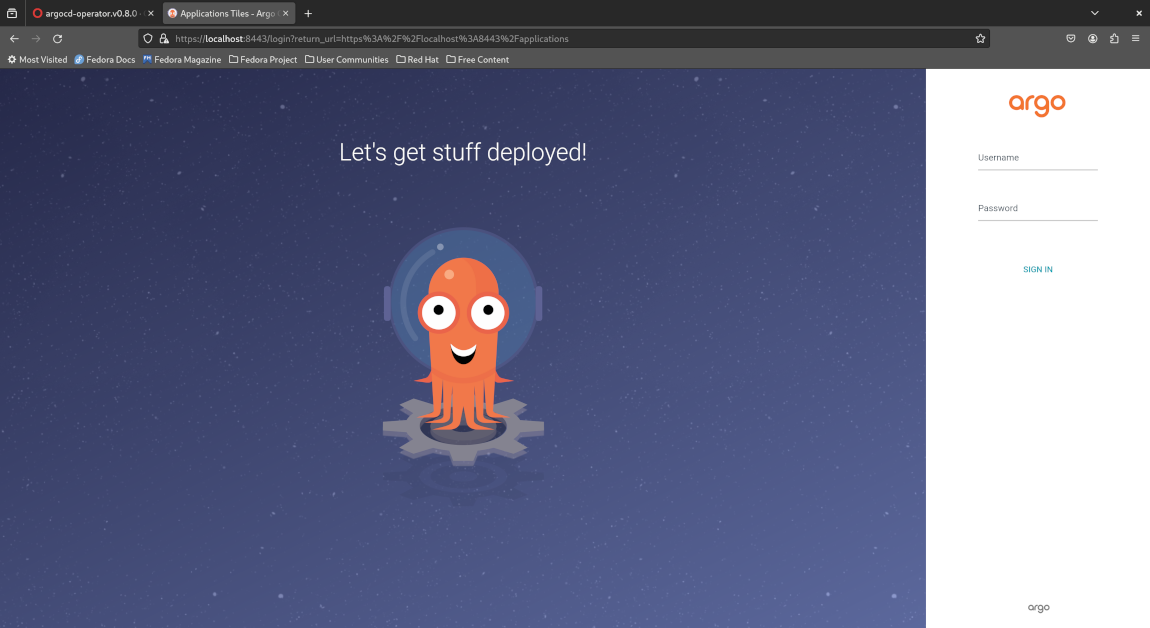

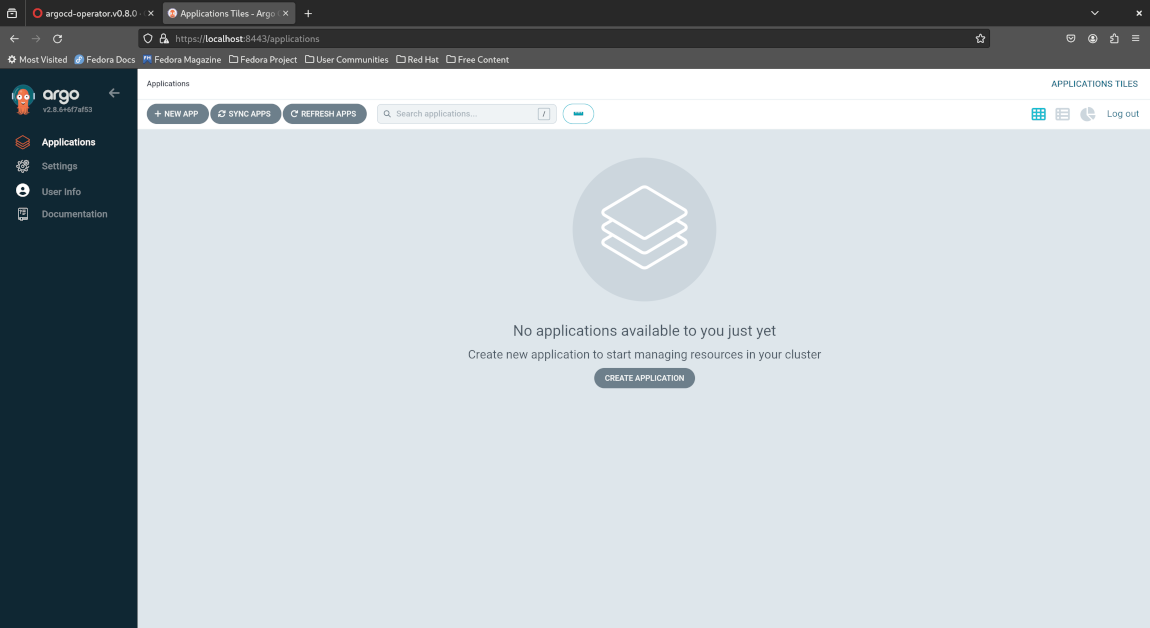

The Argo CD web console is exposed by the argocd-server Service, which is of type ClusterIP by default and therefore only reachable from within the cluster. Forward this Service to local port 8443, then open https://localhost:8443 in a new browser window. Ignore any certificate warnings reported by your browser, which should then present us with a login portal.

oc -n argocd port-forward svc/argocd-server 8443:443

The login username is admin, and the initial login password is stored in the argocd-cluster Secret under the key admin.password.

Open a new terminal window (again) and make oc available in our PATH.

eval $(crc oc-env)

Now fetch the initial login password, first the kubectl way:

oc -n argocd get secret \
  argocd-cluster \
  -o jsonpath='{.data.admin\.password}' | \
  base64 -d -

Notice how this command is difficult to read and understand since it doesn’t tell us what we want to achieve (extract the initial login password); instead, it only tells us how to achieve it (get the .data.admin\.password field in the argocd-cluster secret and decode it as Base64).
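The “how” boils down to two details. First, Secret values under .data are Base64-encoded. Second, the dot in the key admin.password is escaped (admin\.password) so that jsonpath does not read it as a nested field. The decoding step can be illustrated offline with a made-up value standing in for the real password:

```shell
#!/bin/sh
# Made-up value for illustration; not a real Argo CD password.
plaintext='s3cr3t-p4ss'

# Secret .data values are Base64-encoded, which is what the jsonpath query returns...
encoded="$(printf '%s' "$plaintext" | base64)"
echo "stored in .data: $encoded"

# ...and `base64 -d` turns them back into the original value.
decoded="$(printf '%s' "$encoded" | base64 -d)"
echo "decoded: $decoded"
```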

Fortunately, oc provides a more readable way to extract the initial login password from our Secret:

oc -n argocd extract secret/argocd-cluster --to=-

Finally, log in to the Argo CD web console with the username admin and the password printed by the command above. This should bring us to the Argo CD dashboard.

Cleaning up

Tearing down our OpenShift cluster with CRC is simple. Just run:

crc delete

Answer y when prompted.

Concluding remarks and going further

OpenShift is a full-fledged IDP developed by Red Hat on top of Kubernetes that greatly enhances the developer experience. This improves developer productivity, reduces time to market and enables enterprises to focus on delivering business value instead of the undifferentiated heavy lifting of building and maintaining their own IDP. Throughout the lab, we saw two major value-added features of OpenShift that make this possible. The first is the integrated OpenShift web console. The second is the ability to install, in a few clicks, software controllers known as operators, which manage the entire lifecycle of applications on behalf of the cluster administrator.

This article is by no means a comprehensive introduction to OpenShift. If you would like to dive deeper into OpenShift, consider the following resources:

- Introduction to Containers, Kubernetes and OpenShift, a self-paced online course by IBM on edX available at no cost (Red Hat is a subsidiary of IBM)

- The Red Hat OpenShift skill paths recommended by Red Hat

Additionally, if you would like to dive into Argo CD, consider the following resources:

- Read through the Argo CD documentation and try out some of the tutorials there

- The Certified Argo Project Associate (CAPA) exam and associated training material offered by the Linux Foundation

I hope you enjoyed this article and stay tuned for new content ;-)
